ODIE DESMITH

GOAL / QUESTION

This project started as an assignment for Interface Design, a class I took at CalArts, where I learned principles of interactive design from Ajay Kapur and Andrew Piepenbrink. In the class we were asked to create a controller for sound or other applications that worked in a new and unique way. In short, it had to be something you couldn’t buy off the shelf. My goal with this project was to create a multifaceted controller using modern sensor technology, inherent dynamics, and artificial intelligence. I was curious to see whether I could design an instrument that could be applied to any sonic scenario and produce as many relevant, controllable outputs as the task required. With these considerations in mind, the SQUISHBOI was born.

CULTURE

SQUISHBOI both continues the long history of physical precision in music and works to bridge the gap between the human body and digital systems with dozens of necessary parameters. As I continue to iterate on the design in both software and hardware, SQUISHBOI may become useful as a high-precision recorder of gestural data for ethnomusicology applications.

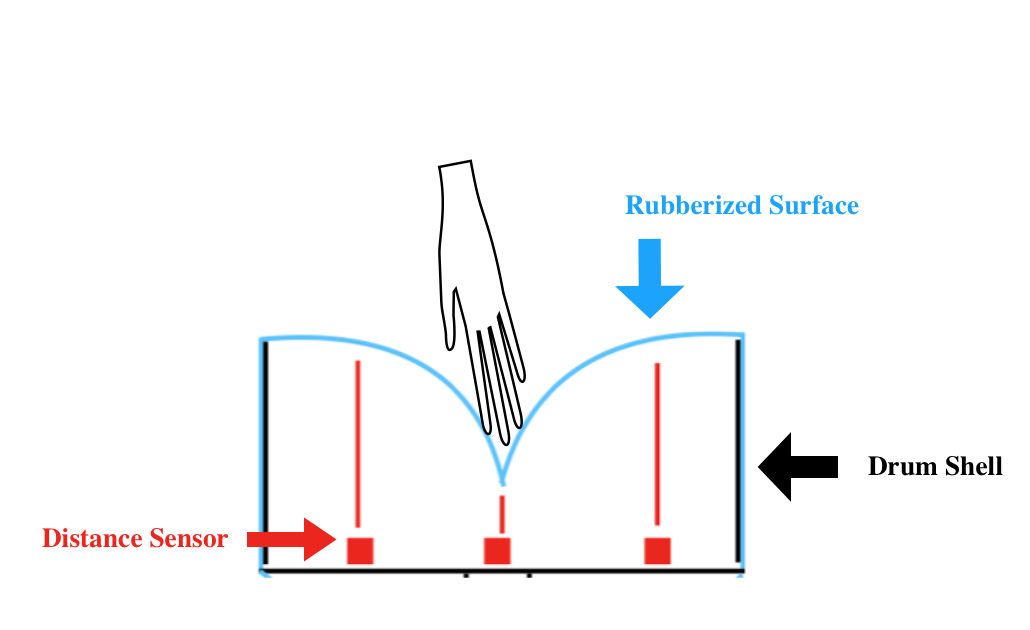

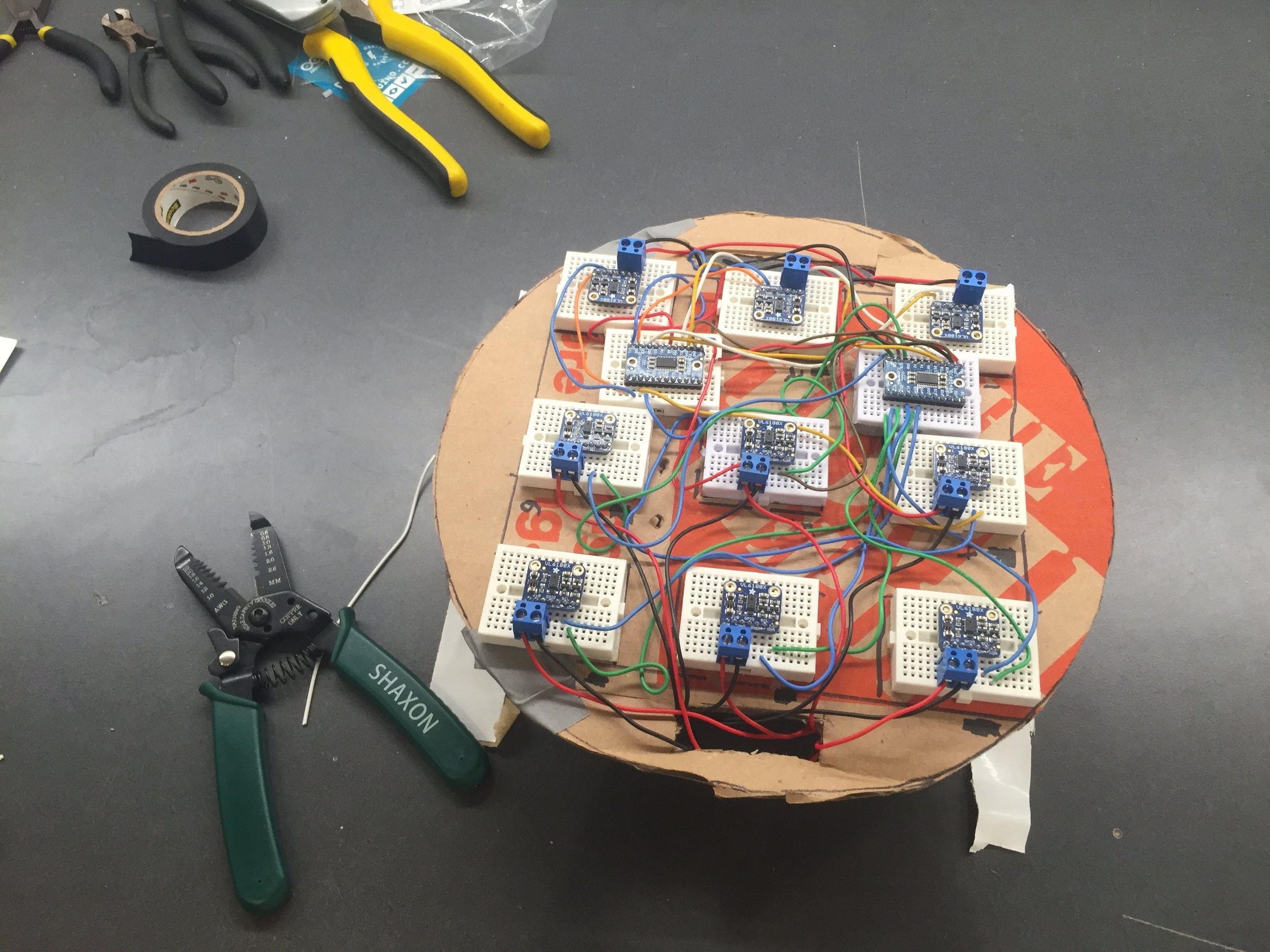

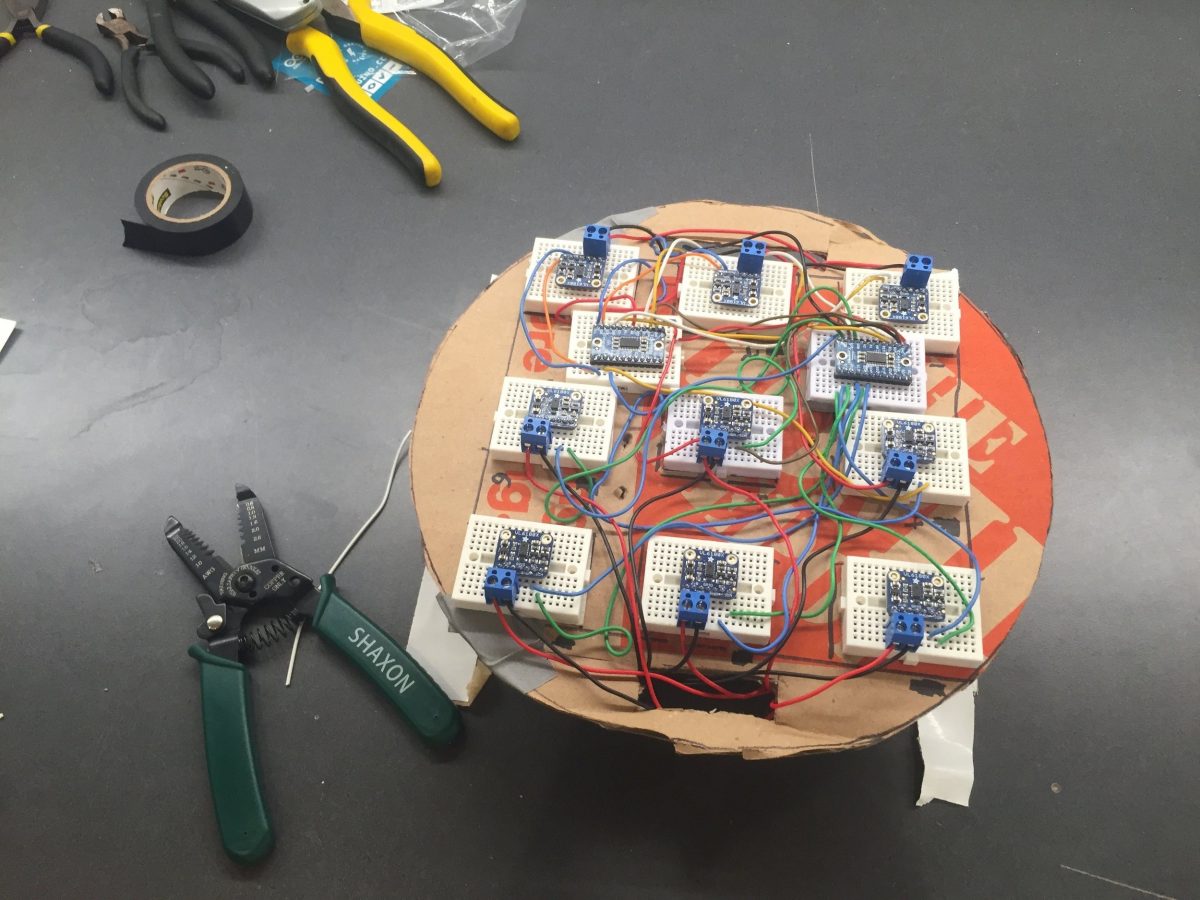

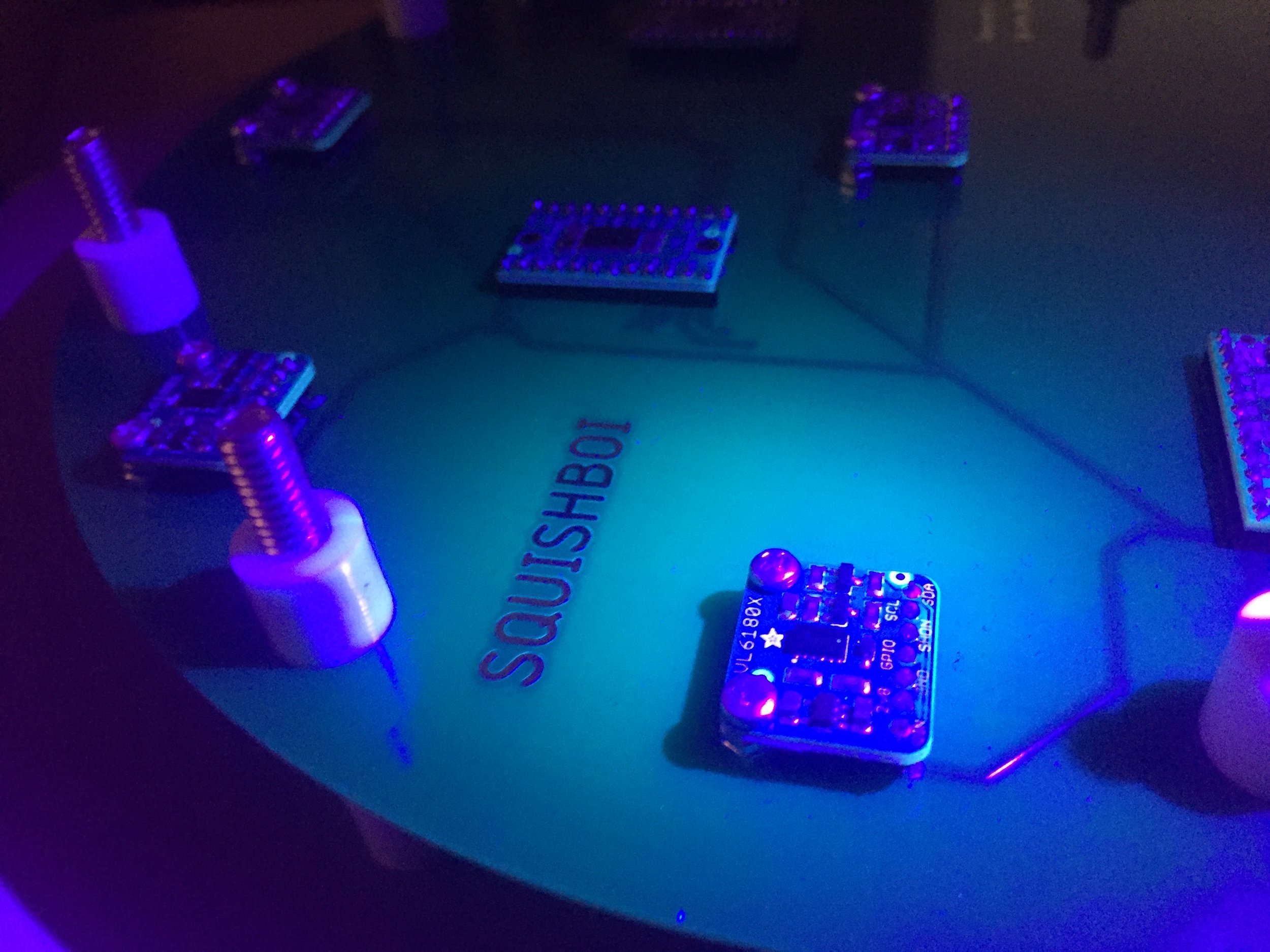

INPUT

SQUISHBOI uses cutting-edge time-of-flight (ToF) distance sensors that have only come to market in the last year or two. These micro LIDAR chips are essentially short-range, single-point versions of the sensor technology found in drones and self-driving cars! After several rounds of prototyping, I printed a PCB with a 3×3 array of distance sensors to mimic the three-dimensional sensing abilities of advanced LIDAR cameras that use dot plane projection. The rubberized surface above these sensors gives the data a form of inherent dynamics: squishing any one side will likely affect the rest of the input data. This is especially useful when mapping SQUISHBOI’s data to interlinked musical parameters in your digital audio workstation.
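To make the sensor array more concrete, here is a minimal Python sketch of how nine raw distance readings could be turned into one input vector for a model. It assumes the readings arrive in millimetres as a 3×3 grid; the rest and fully squished distances (`REST_MM`, `MIN_MM`) are hypothetical calibration values, not measurements from SQUISHBOI, and the function names are illustrative only.

```python
from typing import List, Sequence

# Hypothetical calibration values (assumptions, not SQUISHBOI measurements).
REST_MM = 40.0  # assumed sensor-to-rubber distance when the surface is untouched
MIN_MM = 10.0   # assumed distance when the surface is fully squished


def compression(reading_mm: float, rest_mm: float = REST_MM, min_mm: float = MIN_MM) -> float:
    """Map one distance reading to 0.0 (untouched) through 1.0 (fully pressed)."""
    value = (rest_mm - reading_mm) / (rest_mm - min_mm)
    # Clamp readings that fall outside the assumed calibration range.
    return max(0.0, min(1.0, value))


def grid_to_features(grid: Sequence[Sequence[float]]) -> List[float]:
    """Flatten a 3x3 grid of readings, row by row, into 9 compression values."""
    if len(grid) != 3 or any(len(row) != 3 for row in grid):
        raise ValueError("expected a 3x3 grid of distance readings")
    return [compression(reading) for row in grid for reading in row]


if __name__ == "__main__":
    # Pressing only the center of the surface from 40 mm down to 25 mm.
    print(grid_to_features([[40, 40, 40], [40, 25, 40], [40, 40, 40]]))
```

In this idealized sketch a center press changes only the fifth value (to 0.5). On the physical controller the rubber couples neighbouring sensors, so the surrounding values would likely move as well; that coupling is the inherent dynamics a trained model can take advantage of.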

RULES

Since I have two SQUISHBOIs available for this exhibition, I decided to create three trained models: one unique to each SQUISHBOI, and a third that listens to data from both simultaneously and creates an abstraction of their active values. I trained the models in Wekinator using live input data from the SQUISHBOIs. After building a Max patch to package and format the MIDI messages as OSC for Wekinator, I used the default example that interpolates continuous data using either a neural network or polynomial regression. I chose a neural network for this application, as I found its results closer to what I expected with fewer training examples.

For one controller, I attempted to create pseudo XYZ control using the rubberized surface of the SQUISHBOI. This training worked fairly well: even with the prototype still in its initial stage, the network begins to learn the mapping when given enough training data. The second unique mapping was more or less salted to taste, producing rhythms that I found musically useful and wanted to be able to modulate between on the fly with the SQUISHBOI.
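The Max patch itself isn’t reproduced here, but the message it has to produce is simple. The following Python sketch is a stand-in for that patch, not the patch itself: it encodes a list of floats as an OSC 1.0 message and sends it over UDP. It assumes Wekinator’s default input settings (port 6448, address `/wek/inputs`) and that Wekinator runs on the same machine; the helper names are hypothetical.

```python
import socket
import struct
from typing import Sequence

WEKINATOR_HOST = "127.0.0.1"   # assumes Wekinator runs on this machine
WEKINATOR_PORT = 6448          # Wekinator's default OSC input port
INPUT_ADDRESS = "/wek/inputs"  # Wekinator's default input message address


def osc_string(text: str) -> bytes:
    """OSC string: ASCII, null-terminated, zero-padded to a multiple of 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def encode_osc_floats(address: str, values: Sequence[float]) -> bytes:
    """Build an OSC message whose arguments are all big-endian 32-bit floats."""
    type_tags = "," + "f" * len(values)
    args = b"".join(struct.pack(">f", float(v)) for v in values)
    return osc_string(address) + osc_string(type_tags) + args


def send_to_wekinator(values: Sequence[float]) -> None:
    """Send one frame of input values to Wekinator as a single UDP datagram."""
    packet = encode_osc_floats(INPUT_ADDRESS, values)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(packet, (WEKINATOR_HOST, WEKINATOR_PORT))
```

Wekinator expects the number of floats in each message to match the number of inputs configured in the project. Under the assumption that every sensor is passed through as its own input, each single-controller model would take nine values and the combined model eighteen.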

OUTPUT

The output of this system is MIDI data that can control any musical parameter in a digital audio workstation such as Ableton Live. While this is the application I chose for this exhibit, and likely what I will use it for in the future, nothing is stopping you from using SQUISHBOI as a high-precision controller with machine-learning behavior in any software environment that accepts MIDI or OSC data!
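On the output side, each trained model produces continuous values that end up as MIDI. A minimal Python sketch of that last step, assuming each model output has already been limited to the range 0.0 to 1.0, scales every value to 7 bits and packs it into a raw three-byte Control Change message. The channel and the starting controller number (`first_cc = 20`) are arbitrary assumptions, and the functions are illustrative rather than part of the exhibited system.

```python
from typing import List, Sequence

CONTROL_CHANGE = 0xB0  # MIDI status nibble for Control Change


def to_cc_value(x: float) -> int:
    """Scale a model output in 0.0..1.0 to a 7-bit MIDI data value (0..127)."""
    x = max(0.0, min(1.0, x))  # clamp anything the model overshoots
    return round(x * 127)


def control_change(channel: int, controller: int, value: int) -> bytes:
    """Raw 3-byte Control Change message; channel is zero-based (0..15)."""
    if not (0 <= channel <= 15 and 0 <= controller <= 127 and 0 <= value <= 127):
        raise ValueError("channel must be 0..15; controller and value 0..127")
    return bytes([CONTROL_CHANGE | channel, controller, value])


def outputs_to_cc(outputs: Sequence[float], channel: int = 0, first_cc: int = 20) -> List[bytes]:
    """One CC message per model output, on consecutive controller numbers."""
    return [
        control_change(channel, first_cc + i, to_cc_value(v))
        for i, v in enumerate(outputs)
    ]
```

For example, `outputs_to_cc([0.0, 0.5, 1.0])` yields CC 20 = 0, CC 21 = 64 and CC 22 = 127 on MIDI channel 1 (0 in the zero-based status byte). Getting these bytes into Ableton Live would additionally require a MIDI port, virtual or physical, which this sketch leaves out.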

SOCIAL / CULTURAL IMPLICATIONS

SQUISHBOI is an interface at the intersection of several emerging technologies. LIDAR and artificial intelligence are both huge buzzwords right now for good reason, and pairing the two is really a no-brainer! Now that shorter-range LIDAR time-of-flight sensors and cameras are reaching the market, it’s finally possible to train networks on data this precise for many more creative applications.

ACKNOWLEDGEMENTS

Special thanks to Ajay Kapur for pushing students to be on the cutting edge and to pursue out-of-the-box ideas. I’d also like to thank my close friend, mentor, and longtime partner on this project, Andrew Piepenbrink, who has helped me through more off-by-one errors than I can count. Without Andrew, this project would never have come this far. I’d also like to thank Marijke Jorritsma, my UX teacher this semester at CalArts. Marijke pushed me to explore unexpected forms of visual presentation and led a three-week intensive design sprint focused on SQUISHBOI’s presentation in the Walt Disney Modular Theatre for the 2019 CalArts Expo. Special thanks to Christine Meinders for demystifying AI and for bringing her expertise to our school. And last but certainly not least, thanks to SupplyFrame DesignLab and everyone who helped make this event possible!